How to Implement Automic Automation Processing of Real-Time Serverless Events

by: Tobias Stanzel

Before You Start

The content you will require is held in a GitHub repository; you can access it here.

It contains:

- Implementation Guide (this document)

- An Automic extract of the Automation Engine objects you will require

- Source for the Lambda function itself

To create this in your own environment you will need:

AWS

- S3 bucket that you will use to upload files

- KMS to create an encryption key and assign the Lambda function role as a user

- Lambda to deploy and configure the Automic REST API function

Automic Automation

- Automic Automation v12.3 with REST interface accessible from the internet

Required Steps

- Prepare Automic to receive the event via REST

- Create/Choose an S3 Bucket

- Create Lambda Function based on template and set it up

- Make sure the password is encrypted

- It's Ready

Below, I detail the individual actions you need to take.

Serverless App: Automic Automation REST API

To make this integration easy to use, I published a small function to AWS Lambda that can be configured to send any AWS event it receives in Lambda as a JSON payload to the Automic Automation REST API.
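To make the idea concrete, here is a minimal sketch of what such a function could look like. It is not the source of the published function. The endpoint path `/ae/api/v1/{client}/executions`, the request body shape, the input key `&EVENT_JSON#`, and all environment variable names are assumptions made for illustration; check them against the REST API documentation of your Automic version and the function source in the repository.

```python
import base64
import json
import os
import urllib.request


def build_execution_request(event, base_url, client, user, password, object_name):
    """Build (but do not send) a POST request that starts an Automic object."""
    body = {
        "object_name": object_name,
        # Assumption: the whole AWS event travels as one JSON string
        # in the single PromptSet parameter.
        "inputs": {"&EVENT_JSON#": json.dumps(event)},
    }
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url=f"{base_url}/ae/api/v1/{client}/executions",  # assumed endpoint path
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
        method="POST",
    )


def lambda_handler(event, context):
    # Hypothetical environment variable names, used only for this sketch.
    request = build_execution_request(
        event,
        os.environ["AUTOMIC_URL"],
        os.environ["AUTOMIC_CLIENT"],
        os.environ["AUTOMIC_USER"],
        os.environ["AUTOMIC_PASSWORD"],
        os.environ["AUTOMIC_OBJECT"],
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return {"status": response.status}
```

Keeping the request construction separate from the handler makes it possible to inspect the payload locally before anything is deployed.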

Automic Automation Engine: Objects to process S3 event

Let’s start by creating the required objects to receive the S3 JSON event and write it to a static VARA object for further processing in the Automation Engine.

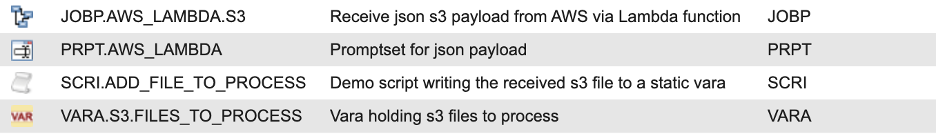

You can use the export provided in the repository, which contains these objects:

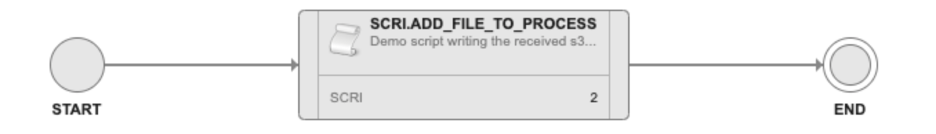

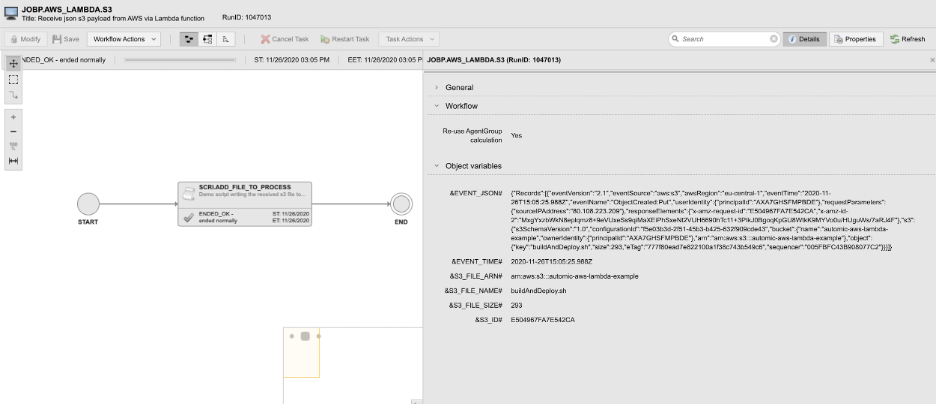

The workflow JOBP.AWS_LAMBDA.S3 is very simple. It uses the PromptSet PRPT.AWS_LAMBDA, which has a single parameter, &EVENT_JSON#, to receive the JSON payload, and one script, SCRI.ADD_FILE_TO_PROCESS, which parses the JSON into AE variables and writes the file to the static VARA VARA.S3.FILES_TO_PROCESS for further processing.

The test_payload.json file contains a JSON payload for an S3 put request to a bucket that can be used to test the workflow.
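To illustrate which fields of the event matter for this workflow, the sketch below parses a trimmed S3 put event and extracts the bucket name and object key. It is written in Python for illustration only; the actual SCRI.ADD_FILE_TO_PROCESS script runs inside the Automation Engine. The sample event is a shortened, hypothetical stand-in for test_payload.json, and the function name `files_to_process` is made up for this example.

```python
import json
from urllib.parse import unquote_plus

# Shortened, hypothetical S3 put event; a real event carries more fields.
SAMPLE_EVENT_JSON = """
{
  "Records": [
    {
      "eventSource": "aws:s3",
      "eventName": "ObjectCreated:Put",
      "awsRegion": "eu-central-1",
      "s3": {
        "bucket": {"name": "automic-aws-lambda-example"},
        "object": {"key": "incoming/report+2020.csv", "size": 1024}
      }
    }
  ]
}
"""


def files_to_process(event_json):
    """Return one entry per S3 record with bucket, decoded key, and event name."""
    event = json.loads(event_json)
    files = []
    for record in event.get("Records", []):
        s3 = record["s3"]
        files.append({
            "bucket": s3["bucket"]["name"],
            # Object keys arrive URL-encoded in S3 events (spaces become "+").
            "key": unquote_plus(s3["object"]["key"]),
            "event": record["eventName"],
        })
    return files


if __name__ == "__main__":
    for entry in files_to_process(SAMPLE_EVENT_JSON):
        print(entry)
```

Each returned entry corresponds to one file that the workflow would record in VARA.S3.FILES_TO_PROCESS.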

AWS S3 bucket

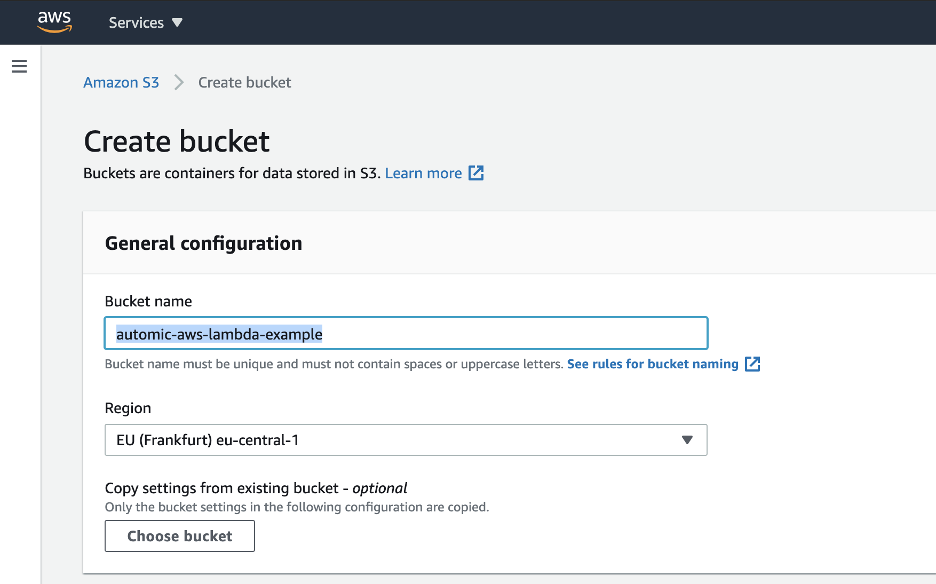

Choose the region where you want to create your example, and make sure you create all required resources in that region. Here I am using EU Frankfurt (eu-central-1).

First, we need to create an S3 bucket which we will use for this demo.

I called it “automic-aws-lambda-example”. If you choose another name, make sure to also replace it in the Lambda function we will create.

AWS Lambda Function and Encryption Key

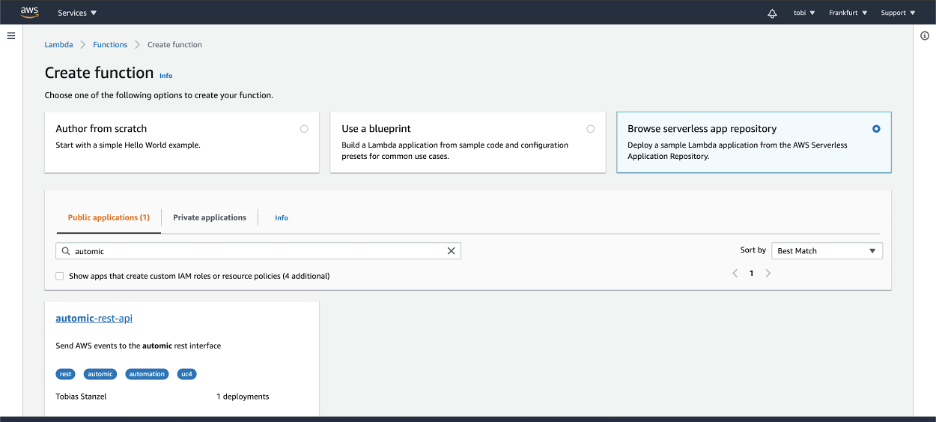

Next, we will create an AWS Lambda function. Choose “Browse serverless app repository” and search for “automic”.

Select automic-rest-api and click “Deploy” to create the function.

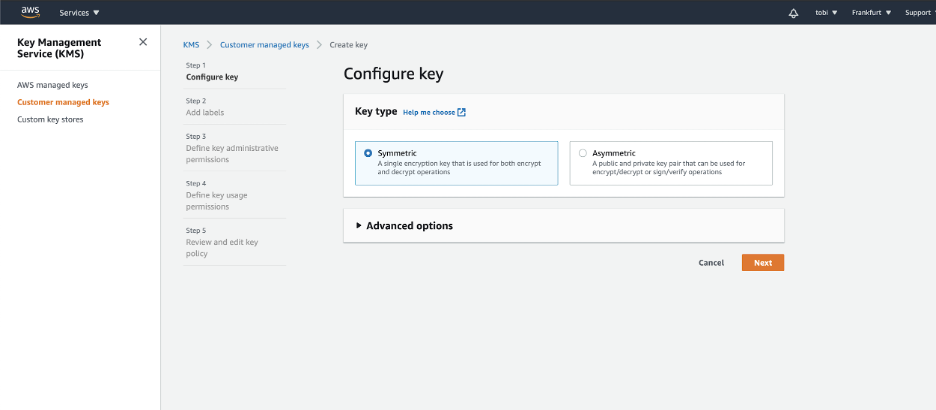

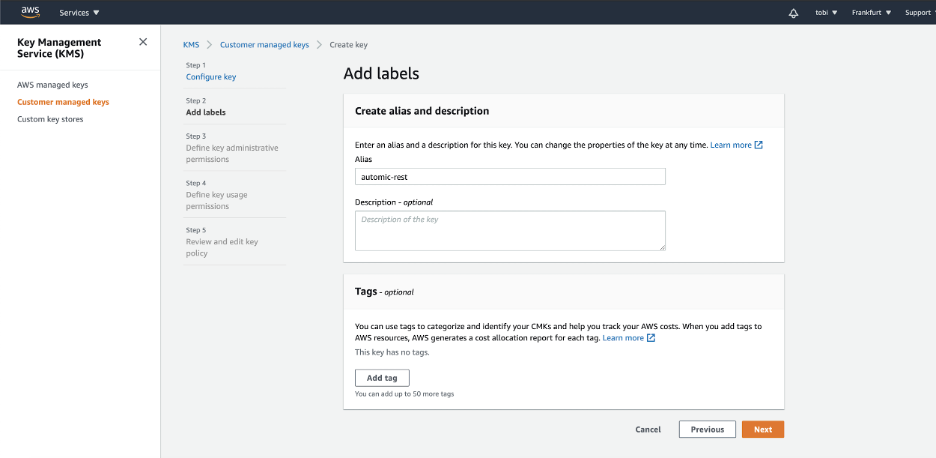

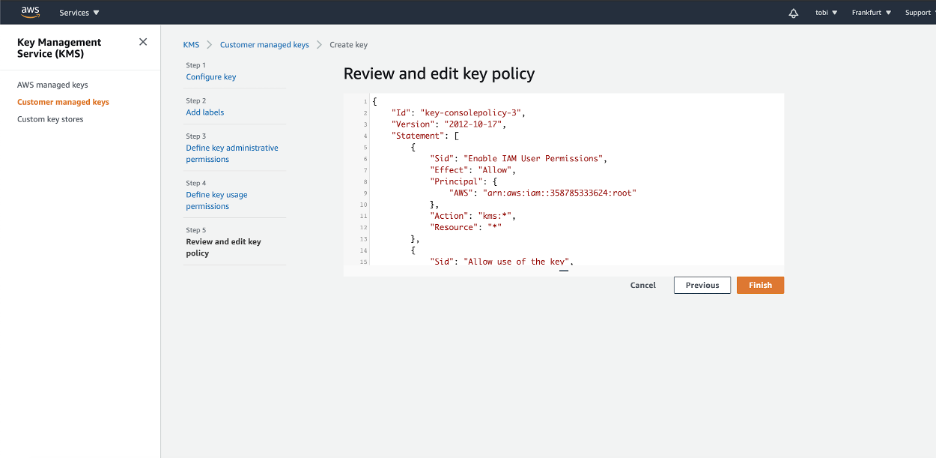

To encrypt the password field, we need to create or reuse an encryption key in the Key Management Service (KMS).

Choose “Symmetric” and click “Next”.

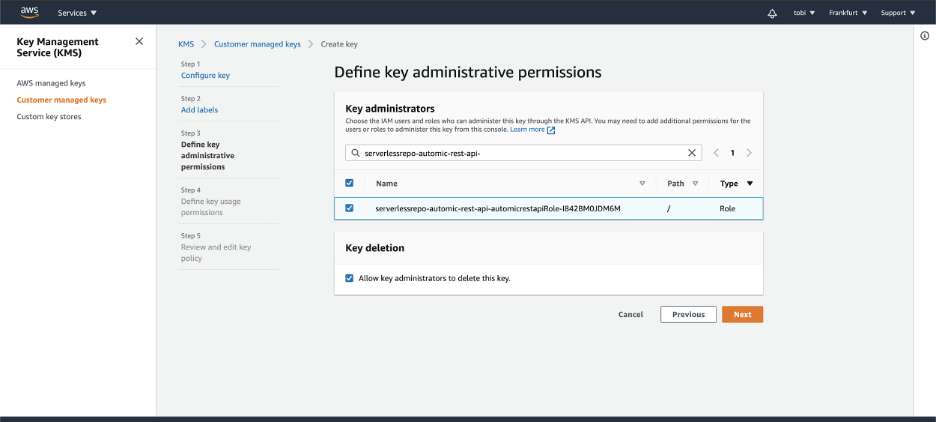

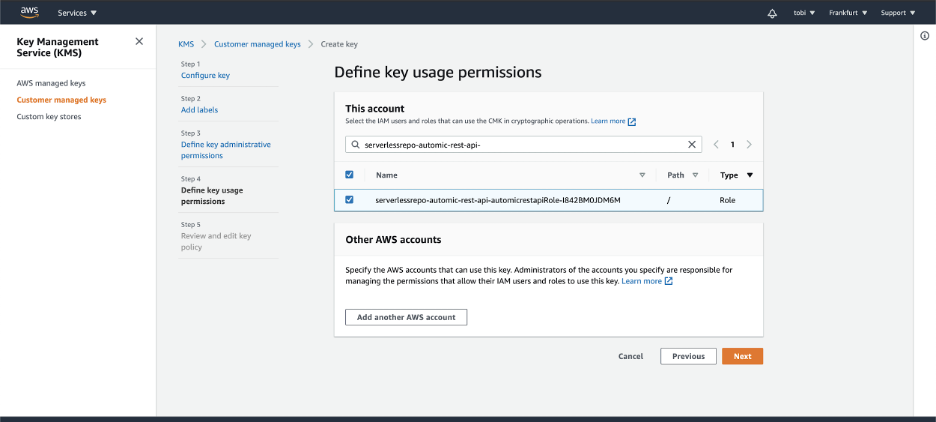

Now we need to choose administrative and usage permissions.

AWS created a dedicated role for our Lambda function, so we need to assign that role here in steps 3 and 4. The name of the role will start with “serverlessrepo-automic-rest-api-”.

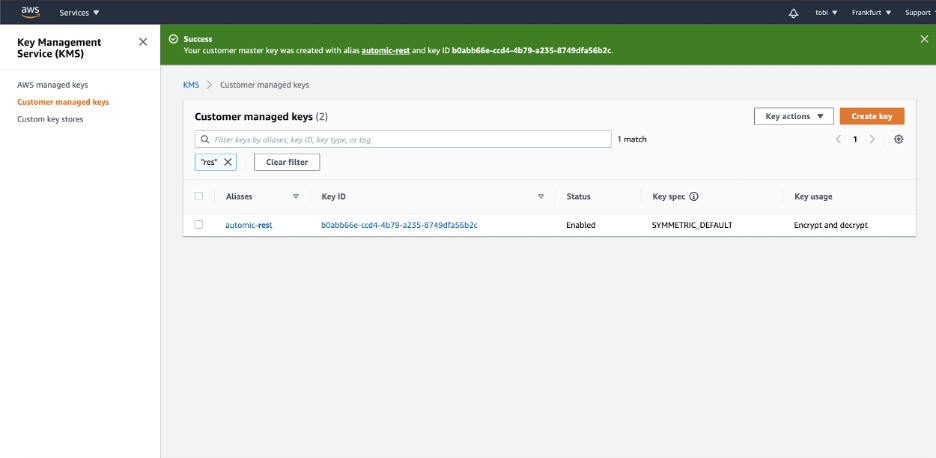

Create the key by clicking “Finish”. You should see something like this:

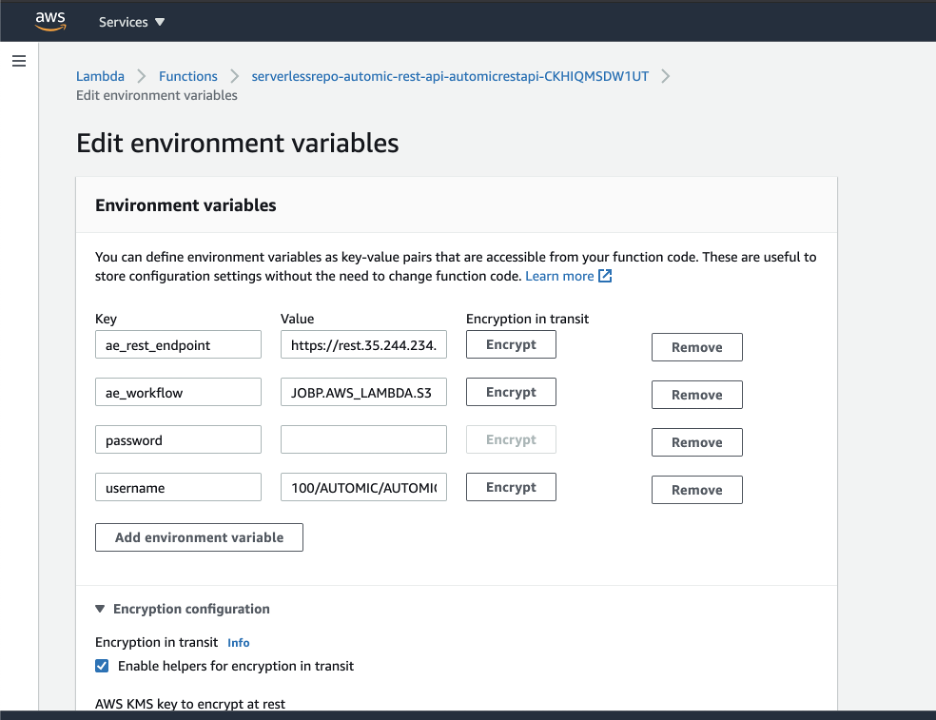

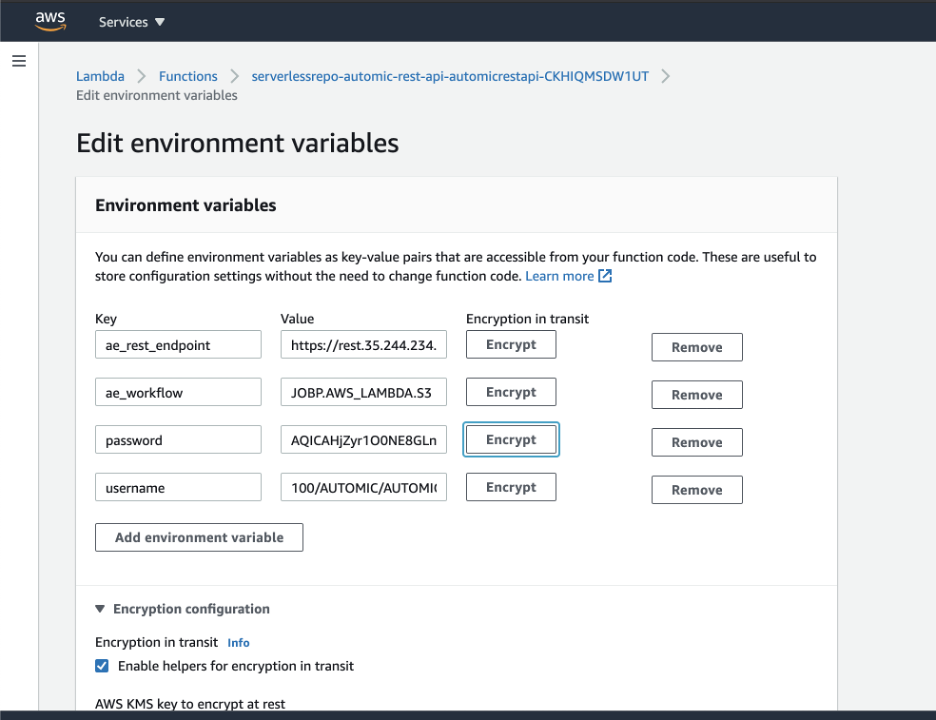

Now go back to your AWS Lambda function, navigate to “Latest Configuration -> Environment”, and edit the environment variables.

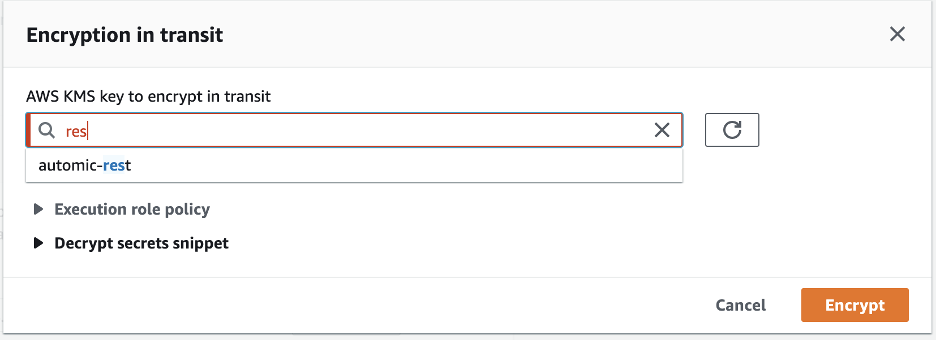

Choose “Enable helpers for encryption in transit” and enter the password. Press “Encrypt” on the password field only, and select the key we just created.

The password is now stored encrypted instead of in plain text.

Save the Environment variables.
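Once the value is encrypted, the function has to decrypt it at runtime before it can authenticate against Automic. The sketch below shows one way this could be done with the KMS `decrypt` call. It assumes the console helpers encrypted the value with an encryption context of `LambdaFunctionName`, which is what the AWS console suggests in its own sample snippet; the helper name `decrypt_env_value` and the variable name `AUTOMIC_PASSWORD` are hypothetical.

```python
import base64


def decrypt_env_value(kms_client, ciphertext_b64, function_name):
    """Decrypt a base64-encoded value that was encrypted with the console helpers."""
    response = kms_client.decrypt(
        CiphertextBlob=base64.b64decode(ciphertext_b64),
        # Assumption: the console helper bound the ciphertext to the function name.
        EncryptionContext={"LambdaFunctionName": function_name},
    )
    return response["Plaintext"].decode("utf-8")


# Inside the Lambda function this could be called as follows
# (AUTOMIC_PASSWORD is a hypothetical variable name):
#
#   import os
#   import boto3
#   password = decrypt_env_value(
#       boto3.client("kms"),
#       os.environ["AUTOMIC_PASSWORD"],
#       os.environ["AWS_LAMBDA_FUNCTION_NAME"],
#   )
```

Passing the KMS client in as an argument keeps the helper testable without AWS credentials. The call only succeeds if the function's role was added as a key user, as described above.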

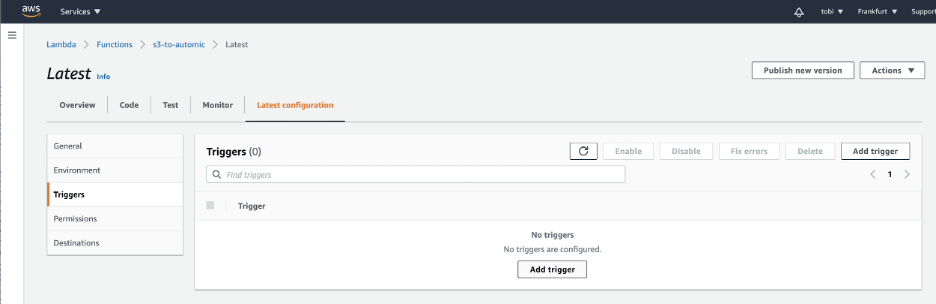

Now we only need to configure the trigger for this function.

Navigate to “Latest configuration -> Trigger” and click “Add trigger”.

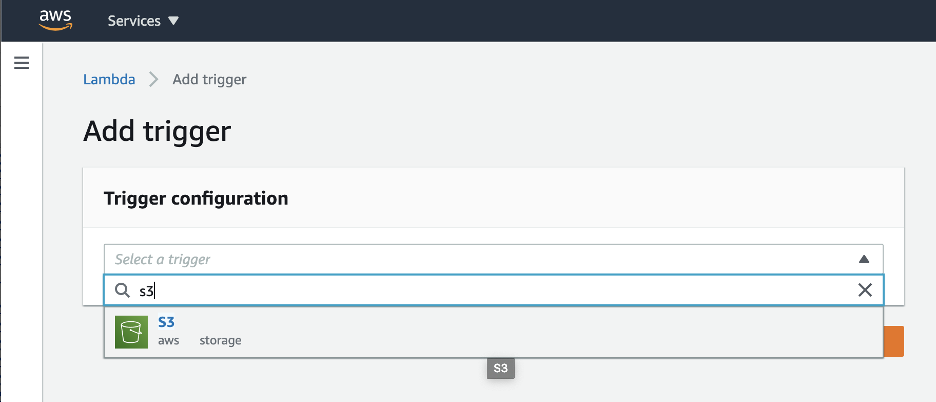

Choose “S3”

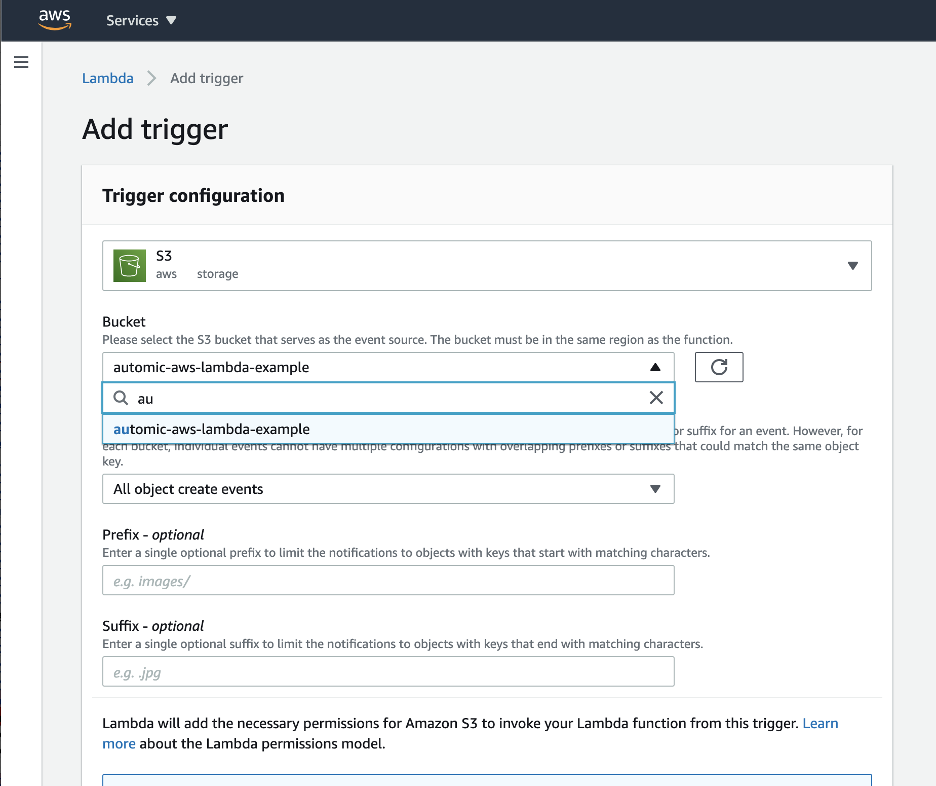

Now select the S3 bucket we created before. I did not restrict the event definition any further, but you can do so according to your use case.
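If you do want to restrict the trigger, the same settings the console offers (event type, prefix, suffix) can also be expressed as an S3 notification configuration. The following is a rough sketch of that structure; the function name `s3_trigger_config`, the ARN, and the example prefix and suffix are made up for illustration.

```python
def s3_trigger_config(lambda_arn, prefix="", suffix=""):
    """Build an S3 notification configuration limited to object-created events."""
    rules = []
    if prefix:
        rules.append({"Name": "prefix", "Value": prefix})
    if suffix:
        rules.append({"Name": "suffix", "Value": suffix})

    config = {
        "LambdaFunctionArn": lambda_arn,
        "Events": ["s3:ObjectCreated:*"],
    }
    # Only add a filter when at least one rule was requested.
    if rules:
        config["Filter"] = {"Key": {"FilterRules": rules}}
    return {"LambdaFunctionConfigurations": [config]}


if __name__ == "__main__":
    # Hypothetical ARN and filter values.
    arn = "arn:aws:lambda:eu-central-1:123456789012:function:automic-rest-api"
    print(s3_trigger_config(arn, prefix="incoming/", suffix=".csv"))
```

A dictionary like this could be passed as `NotificationConfiguration` to boto3's `put_bucket_notification_configuration`. Note that the Lambda function also needs a permission that allows S3 to invoke it, which the console adds for you when you create the trigger there.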

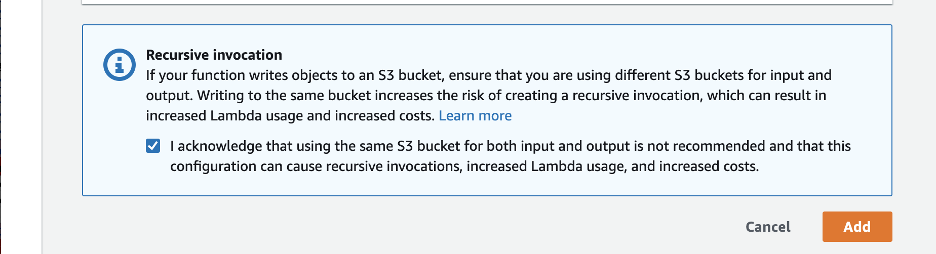

Good hint by AWS ;) Don’t have the function write to the same S3 bucket that triggers it, or you will end up in recursive hell and consume a lot of compute resources!

As soon as the trigger is active, the function sends a REST call to the Automic REST interface for every file that is uploaded to the S3 bucket.

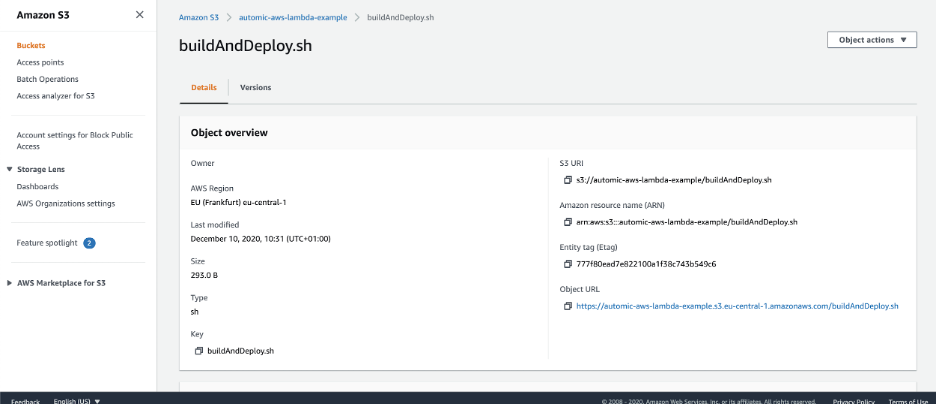

Uploaded file to S3

The workflow triggered in the Automic Automation Engine

You can see the mapped properties from the S3 event in the workflow!